Hashing

Weiss Ch. 5

Overview

Hash tables are a very clever storage and lookup mechanism with potential for O(1) lookup and insertion. Algorithms that guarantee that bound in the worst case are complex, and new hashing schemes with very good performance, like Cuckoo and Hopscotch (I'm not making any of this up, you know), are too complex for analysis and rely on experiments to justify their governing heuristics.

Cryptographic hashing is a very heavy version of the idea that concentrates on good hash functions, which are rather a secondary consideration (at best) in hash tables. Crypto hashes are found in public-key crypto signatures; Bob sends Alice his encrypted signature and an encrypted hash value along with his (differently) encrypted document. Alice decrypts the document using her private key, and the signature using Bob's public key (!). She applies the known hash function to the decrypted document and should derive the (now-decrypted) hash value in Bob's signature. Else it's a wrong or corrupted doc: e.g. signature and hash have been pasted on to a false doc.

Our PPT goes over the practical, simple, common-or-garden academic hashing ideas. The text also presents modern methods and the usual sort of analysis we see for hashing schemes. This lecture is aimed at outlines and the analysis.

Hashing Concepts

- Hash table
- Item key
- Hash function -- "looks random", range is table size
- Coping w/ collisions
  - separate chaining -- exterior lists, trees, hash tables...
  - linear probing -- no ex-table storage
  - quadratic probing -- ditto
  - double hashing -- ditto
- Enlarge table by rehashing: O(N), but amortized, so OK
- Analysis
- Worst-case O(1) hashing: Perfect, Cuckoo, Hopscotch
- Universal hash functions (randomize the hash fn)
- Extendible hashing (for disk storage)

Analysis

Load factor λ of a hash table = (number of elements / table size).

Thus in separate chaining with lists:

- the average length of the lists is just λ.

- Time for unsuccessful search = O(1) hash function + λ.

- Time for successful search = O(1) hash function + 1 + λ/2.

- Why λ/2? With N items in M lists (bins), the list holding the item we find contains on average (N-1)/M = λ - 1/M ≅ λ "wrong" items, and we expect to pass about half of them before the match. So our claim works out, and makes sense: search on the average half a list of average length λ.

- So absolute table size doesn't matter but its population density, λ, certainly does. Keep it around one (λ ≅ 1).
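A minimal sketch of separate chaining in Python, just to make the load-factor bookkeeping concrete. The class and method names (`ChainedHashTable`, `load_factor`, `average_chain_length`) are made up for illustration and are not from Weiss; Python's built-in `hash()` stands in for the hash function.

```python
class ChainedHashTable:
    """Separate chaining: each of the M bins holds a plain Python list."""

    def __init__(self, num_bins=11):
        self.bins = [[] for _ in range(num_bins)]
        self.count = 0

    def _chain_for(self, key):
        # hash() is Python's built-in; the modulus maps it onto the table.
        return self.bins[hash(key) % len(self.bins)]

    def insert(self, key):
        chain = self._chain_for(key)
        if key not in chain:          # an unsuccessful search of one chain
            chain.append(key)
            self.count += 1

    def contains(self, key):
        return key in self._chain_for(key)

    def load_factor(self):
        # lambda = number of elements / table size
        return self.count / len(self.bins)

    def average_chain_length(self):
        return sum(len(chain) for chain in self.bins) / len(self.bins)


table = ChainedHashTable(num_bins=11)
for k in range(22):
    table.insert(k)
```

With 22 keys in 11 bins the load factor and the average chain length are both 2, which is the first bullet above in action.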

Linear Probing -- Primary Clustering

No external storage, just probe forward to the next table location. Big hazard here is primary clustering, which is an obvious phenomenon and weakness. If a "cluster" starts (say 2 adjacent elements fill up), it's more likely a new entry will land on the cluster, after which it's guaranteed to wind up at the end of the cluster, enlarging it...oops.

With linear probing, insertions and unsuccessful searches must take the same amount of time, which turns out to be roughly (1/2)(1 + 1/(1-λ)²) probes.

Successful searches should perform fewer probes on average than unsuccessful, and in fact they take roughly (1/2)(1 + 1/(1-λ)) probes. Calculations are "somewhat involved".
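To watch a primary cluster grow, here is a small linear-probing sketch in Python. It assumes integer keys and the toy hash function key mod table size; `lp_insert` and `lp_find` are hypothetical helper names, and deletion is left out.

```python
EMPTY = None


def lp_insert(table, key):
    """Insert key by linear probing; return how many cells were probed."""
    m = len(table)
    home = key % m                      # toy hash function (an assumption)
    for i in range(m):
        slot = (home + i) % m           # probe forward, wrapping around
        if table[slot] is EMPTY or table[slot] == key:
            table[slot] = key
            return i + 1
    raise RuntimeError("table is full")


def lp_find(table, key):
    """Return (found, cells probed) for a linear-probing search."""
    m = len(table)
    home = key % m
    for i in range(m):
        slot = (home + i) % m
        if table[slot] is EMPTY:        # an empty cell ends the search
            return False, i + 1
        if table[slot] == key:
            return True, i + 1
    return False, m


table = [EMPTY] * 10
# 0, 10, 20 all hash to cell 0 and start a cluster; 1 and 2 then land on it.
probes = [lp_insert(table, k) for k in (0, 10, 20, 1, 2)]
```

Keys 1 and 2 have different home cells from 0, 10 and 20, yet each needs three probes because it lands on the existing cluster and has to walk to its end, enlarging it.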

FOCS has zip on hashing analysis; Knuth vol. 3 section 6.4 has about 46 pages on hashing, with diagrams, code, and relatively a lot of analysis.

Random Probing -- What if No Clustering?

Imagine a purely random collision resolution strategy. Impractical but analyzable. Fraction of empty cells is (1 - λ), so the number of cells we expect to probe for an unsuccessful search is 1/(1 - λ).

Now insertions happen after unsuccessful searches, so we can use this formula to get the average cost of a successful search. λ starts at 0 with an empty table and then increases; the average performance thus starts well but degrades. Also notice that items inserted (quickly) during a low value of λ are going to be easier to find in a successful search: small number of probes both times. Taking account of the varying number of probes takes an integral over the values of λ at work during the insertions, divided by λ to make it an average, so the mean insertion time (which is also the mean successful search time) is:

I(λ) = (1/λ) ∫₀^λ 1/(1-x) dx = (1/λ) ln(1/(1-λ)).

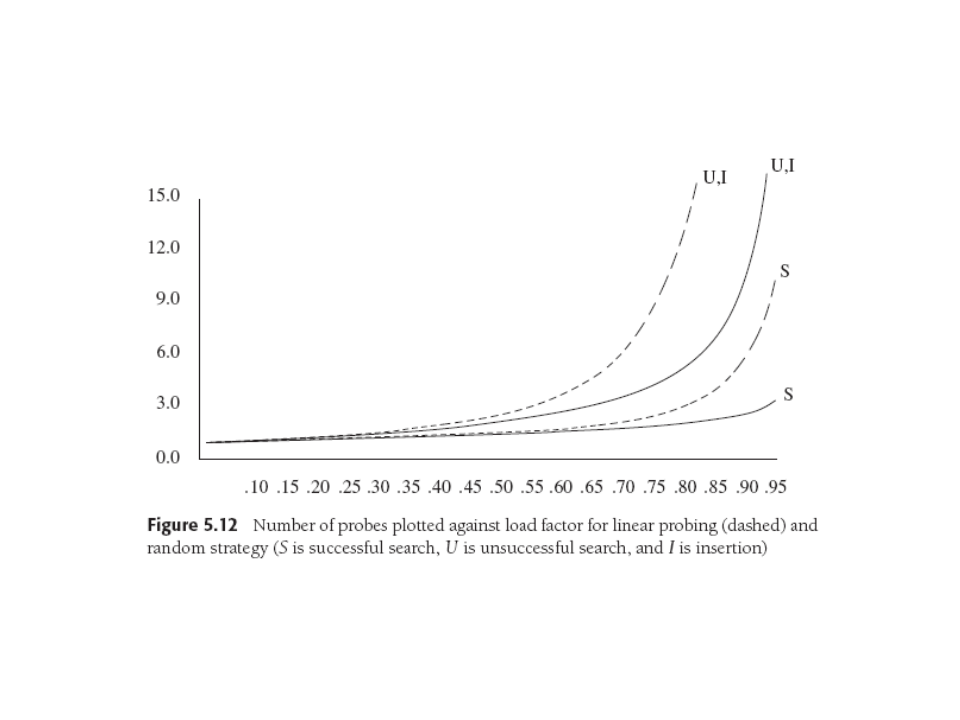

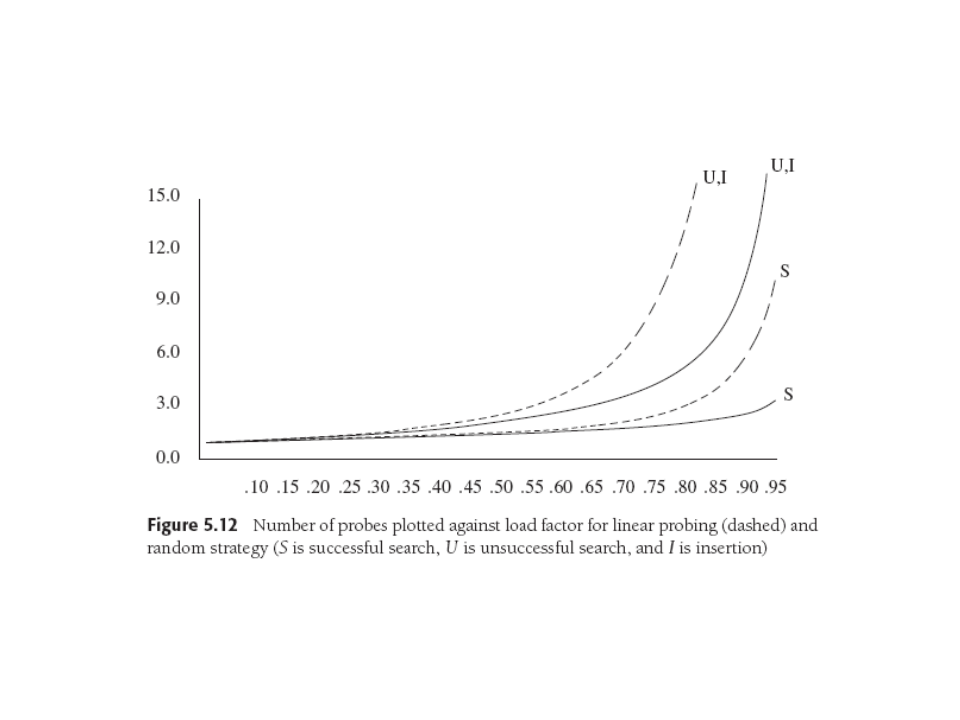

Both these ideal probing formulae are better than the clustering versions quoted before; we can see how clustering hurts us. Typical graphs:

Number of probes vs. load factor for linear (dashed) and random probing: S(uccessful), U(nsuccessful) = I(nsert).
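The four curves can also be tabulated directly from the formulas. This is only a sketch: the function names are invented here, and the formulas are the approximate ones quoted in these notes, with λ written as `lam`.

```python
import math


def linear_unsuccessful(lam):
    # Linear probing, unsuccessful search or insert: (1/2)(1 + 1/(1-lam)^2)
    return 0.5 * (1 + 1 / (1 - lam) ** 2)


def linear_successful(lam):
    # Linear probing, successful search: (1/2)(1 + 1/(1-lam))
    return 0.5 * (1 + 1 / (1 - lam))


def random_unsuccessful(lam):
    # Random probing, unsuccessful search or insert: 1/(1-lam)
    return 1 / (1 - lam)


def random_successful(lam):
    # Random probing, successful search: (1/lam) ln(1/(1-lam))
    return (1 / lam) * math.log(1 / (1 - lam))


print("lam   lin-U  lin-S  rnd-U  rnd-S")
for lam in (0.5, 0.75, 0.9):
    print(f"{lam:<5} {linear_unsuccessful(lam):6.2f} {linear_successful(lam):6.2f} "
          f"{random_unsuccessful(lam):6.2f} {random_successful(lam):6.2f}")
```

At λ = 0.5 the gap is small (2.5 vs. 2 probes for an insert), but at λ = 0.9 linear probing needs about 50 probes per insert against 10 for the random ideal, which is why the dashed curves take off.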

Quadratic Probing

On collision, step quadratically, for instance by 1, 4, 9, 16, ..., i².

No guarantee of finding an empty cell if λ > 1/2, or worse for non-prime table sizes. Here's a related result:

Theorem 5.1:

With prime table size and quadratic probing, a new element can always be inserted if λ ≤ 1/2.

Proof:

Let tablesize be an odd prime > 3, and let h(x) be the hash function, so the probe sequence is h₀(x) = h(x) for the first try and h(x) + i² (mod tablesize) for subsequent tries.

Pick two locations h(x) + i² and h(x) + j², where 0 ≤ i, j ≤ ⌊tablesize/2⌋. We're claiming these locations are distinct, so let's assume they're not and look for a contradiction. That is, assume the locations are the same but i ≠ j. Then

h(x) + i² = h(x) + j² (mod tablesize)

i² = j² (mod tablesize)

i² - j² = 0 (mod tablesize)

(i-j)(i+j) = 0 (mod tablesize)

Since tablesize is prime, (i-j) = 0 or (i+j) = 0 (mod tablesize).

But i and j are distinct and both smaller than tablesize, so the first can't be true; and 0 < i+j < tablesize, because both are at most ⌊tablesize/2⌋ and they differ, so the second can't be true. Thus the first ⌈tablesize/2⌉ probe locations are distinct under quadratic probing, and if at most half the table is full, one of them must be empty.
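The theorem is easy to check by brute force for small tables. A sketch, with hypothetical helper names, that lists the probe locations h(x) + i² (mod tablesize) for i = 0, ..., ⌊tablesize/2⌋ and tests whether any repeat:

```python
def quadratic_probe_slots(home, table_size, num_probes):
    """Cells visited by the first num_probes quadratic probes from `home`."""
    return [(home + i * i) % table_size for i in range(num_probes)]


def first_half_distinct(table_size):
    """True if probes i = 0..floor(table_size/2) never repeat a cell."""
    num_probes = table_size // 2 + 1
    for home in range(table_size):
        slots = quadratic_probe_slots(home, table_size, num_probes)
        if len(set(slots)) != len(slots):
            return False
    return True


prime_results = {p: first_half_distinct(p) for p in (7, 11, 13, 101)}
power_of_two_result = first_half_distinct(16)
```

For the primes every entry comes out True, as the proof promises. For table size 16 it fails: starting from cell 0 the probes go 0, 1, 4, 9 and then 16 mod 16 = 0 again, so the sequence starts repeating well before half the table has been tried.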

Double Hashing

Two hash functions, say h and g: if h(x) gives a collision, then use g(x), often by stepping by g(x), so the i-th probe looks at h(x) + i*g(x) (mod table size).

Need to be a bit careful (as always, actually) about hash functions: g(x) shouldn't ever = 0, for instance. Non-prime table sizes can leave cells that are never probed, by running out of 'new' locations early (e.g. in W. p. 187). OK would be g(x) = R - (x mod R), R a prime smaller than table size. Theoretically double hashing is interesting since experimentally it works really well; it's just a bit more complex with those two hash functions.
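A short sketch of the probe sequence, assuming integer keys, h(x) = x mod table size, and g(x) = R - (x mod R). The function name and the particular values of R and the keys are just for illustration.

```python
def double_hash_probes(x, table_size, r):
    """Probe sequence h(x) + i*g(x) (mod table_size), i = 0, 1, 2, ..."""
    h = x % table_size          # first hash function (an assumption)
    g = r - (x % r)             # second hash: always in 1..r, never 0
    return [(h + i * g) % table_size for i in range(table_size)]


# Prime table size 11 with R = 7: the sequence reaches every cell.
good = double_hash_probes(25, 11, 7)

# Non-prime table size 10 with R = 7: for x = 23 the step is 5,
# so the probes just bounce between two cells.
bad = double_hash_probes(23, 10, 7)
```

With table size 11 the step g(25) = 3 shares no factor with 11, so all 11 cells get visited. With table size 10 the step g(23) = 5 divides 10, and only cells 3 and 8 are ever tried: if both are full the insert fails even in a nearly empty table.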

Rehashing

Various signals (load factor, failed insertion) trigger rehashing, which is simply creating a new, bigger table, hashing all elements of the old table into the new one, and recycling the old one. O(N), so a pain, but amortized over the O(N) inserts we've likely had already, its cost is low.
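The amortization claim can be checked by counting. The sketch below assumes the new table is exactly twice the old one and that rehashing is triggered when λ would exceed 1; both are illustrative choices (a real table would typically also round the new size up to a prime). It stores nothing and only counts how many elements get moved.

```python
def count_rehash_moves(num_inserts, initial_size=1, max_load=1.0):
    """Return (total elements moved by rehashing, final table size)."""
    size = initial_size
    stored = 0
    moves = 0
    for _ in range(num_inserts):
        if (stored + 1) / size > max_load:
            moves += stored     # every existing element is rehashed once
            size *= 2           # assumed growth policy: double the table
        stored += 1
    return moves, size


moves, size = count_rehash_moves(1024)
```

For 1024 inserts the rehashes move 1 + 2 + 4 + ... + 512 = 1023 elements in total, under one extra move per insert on average, even though the last single rehash moved 512 of them.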

Hash Tables in the Java Standard Library

You get HashSet and HashMap abstractions, but need to provide "equals" and "hashCode" for your own key classes.

CB has found hash tables to be attractive substitutes for all sorts of other data structures, and rather addictive: esp. in Perl.

Perfect Hashing

We want guaranteed, worst-case O(1) access (routers, hardware, ...).

Just for example, consider separate chaining with a load factor of 1, to see our problem. If we distribute N balls into N bins randomly, what is the expected number of balls in the fullest bin (i.e. the length of the longest list in separate chaining)? The bad news is it's Θ(log N / log log N), or nearly log N.
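A quick simulation makes the bad news visible. This sketch throws N balls into N bins with Python's `random` module and records the fullest bin; the seed, N = 10,000 and the 20 trials are arbitrary choices, and `max_load` is a made-up helper name.

```python
import math
import random
from collections import Counter


def max_load(n, rng):
    """Throw n balls into n bins uniformly at random; return the fullest bin."""
    counts = Counter(rng.randrange(n) for _ in range(n))
    return max(counts.values())


rng = random.Random(2013)       # fixed seed so reruns give the same numbers
n = 10_000
loads = [max_load(n, rng) for _ in range(20)]
average_max = sum(loads) / len(loads)

print("average fullest bin:", average_max)
print("ln N / ln ln N     :", math.log(n) / math.log(math.log(n)))
```

The Θ hides a constant, so the two printed numbers should not be expected to match; the point is that the fullest bin is well above the average load of 1 and grows (slowly) with N.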

Balls and Bins

Stolen with thanks from Avrim at CMU.

Perfect hashing is a classical solution to this problem.

Perfect Hashing

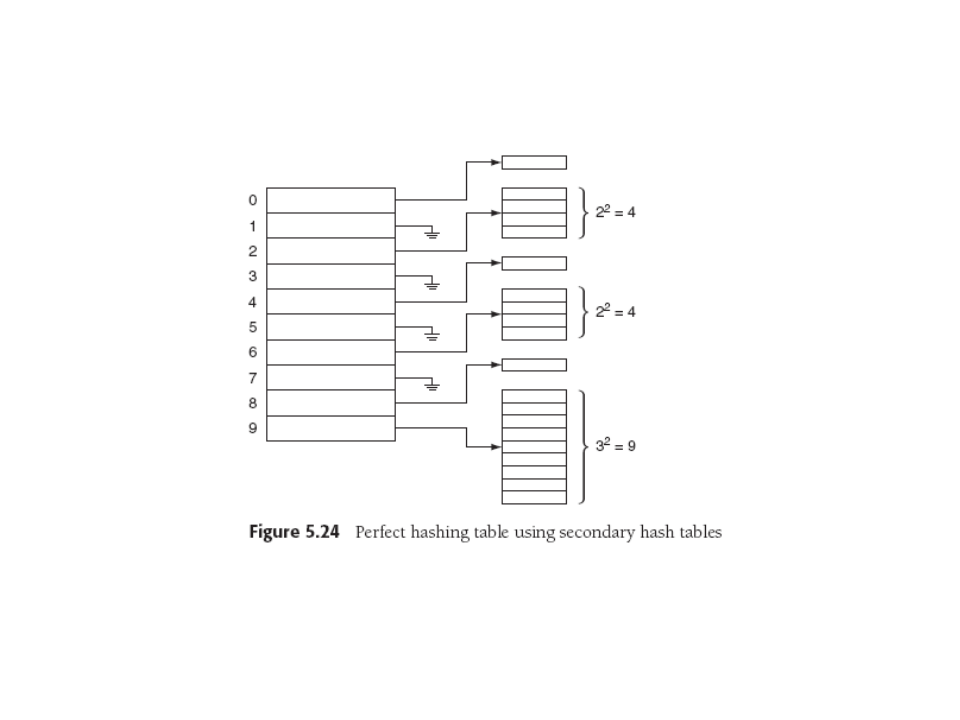

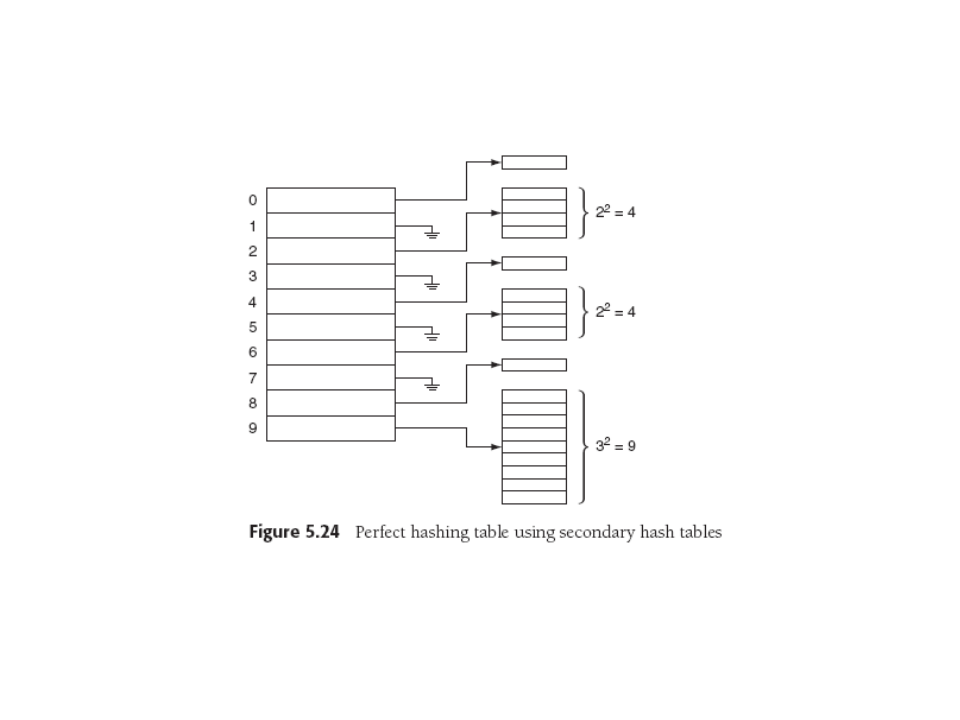

Assume we know the N table items in advance. We'll take the time to construct a hash table with worst-case O(1) access performance.

If our separate chains, or lists, have at most a constant number of items we're done. Also with more lists, they'd be shorter. So how to make sure we don't need too many lists, and how to make sure we don't just get unlucky anyway?

For the second problem, assume a table size of M, so M lists. We choose M large enough (see below) so that there will be no collisions with probability at least 1/2. We can use the principles of universal hashing (Weiss 5.8) to generate good hash functions. In any case, we simply hash our N items into the table -- if we get a collision we give up, empty the table out, and start all over with a new hash function. If M, the table length, is big enough, we can reduce the expected number of attempts to at most 2, and all this work is charged off to the insertion algorithm.

So how large must M be?

Weiss's Theorem 5.2:

If N balls are placed into M = N² bins, the probability that no bin has more than one ball is > 1/2 (easy, cute counting proof, p. 193).

Gulp. Must our table size grow as N²!? Well... suppose we just use N bins, but each bin points to a hash table! Bwah-hah-ha! These only have to be as big as the square of the number of items that landed in that bin, and each is constructed with a different hash function until IT is collision free. You can prove (again, simple counting) Weiss Theorem 5.3, that the total size of the secondary hash tables has expected value at most 2N.
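Here is a rough sketch of the two-level scheme in Python, assuming distinct non-negative integer keys smaller than the prime P. The hash functions are drawn from the usual universal family ((a*x + b) mod P) mod m. The names `random_hash`, `build_perfect_table` and `lookup`, and the choice to retry the primary function until the secondary tables total at most 4N cells, are illustrative assumptions rather than Weiss's code.

```python
import random

P = 2_147_483_647               # the prime 2^31 - 1; keys assumed smaller


def random_hash(m, rng):
    """Draw x -> ((a*x + b) mod P) mod m from a universal family."""
    a = rng.randrange(1, P)
    b = rng.randrange(P)
    return lambda x: ((a * x + b) % P) % m


def build_perfect_table(keys, rng):
    n = len(keys)
    while True:                 # primary level: N bins
        h = random_hash(n, rng)
        bins = [[] for _ in range(n)]
        for k in keys:
            bins[h(k)].append(k)
        # Assumed policy: retry until secondary space is at most 4N.
        if sum(len(chain) ** 2 for chain in bins) <= 4 * n:
            break
    secondary = []
    for chain in bins:          # secondary level: size = (bin count)^2
        size = len(chain) ** 2
        while True:
            g = random_hash(size, rng) if size else None
            slots = [None] * size
            for k in chain:
                if slots[g(k)] is not None:
                    break       # collision: throw g away and try again
                slots[g(k)] = k
            else:
                break           # no collisions: this bin is done
        secondary.append((g, slots))
    return h, secondary


def lookup(table, key):
    """Two hash evaluations and one comparison: worst-case O(1)."""
    h, secondary = table
    g, slots = secondary[h(key)]
    return bool(slots) and slots[g(key)] == key


rng = random.Random(5)
keys = rng.sample(range(1_000_000), 200)
table = build_perfect_table(keys, rng)
```

All the retrying happens at construction time. A lookup never loops, which is the whole point: the price is knowing the keys in advance and spending a few extra attempts while building.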

Cuckoo, Hopscotch, Universal, and Extendible Hashing.

Last four sections of Chapter 5 are of varying difficulty. Extendible hashing is very easy. Universal hashing is mostly modular mathematics, not too hard. Cuckoo and Hopscotch are complex algorithms, in long sections enlivened by figures and code: good investment for pledge party ice-breaking conversation.

Last update: 7/18/13