Preface to

Programming Language Pragmatics

Third Edition

A course in computer programming provides the typical

student’s first exposure to the field of computer science. Most

students in such a course will have used computers all their lives, for

email, games, web browsing, word processing, social networking, and a

host of other tasks, but

it is not until they write their first programs that they begin to

appreciate how applications work. After gaining a certain level

of facility as programmers (presumably with the help of a good course in

data structures and algorithms), the natural next step is to wonder how

programming languages work. This book provides an explanation. It

aims, quite simply, to be the most comprehensive and accurate languages

text available, in a style that is engaging and accessible to the

typical undergraduate. This aim reflects my conviction that students

will understand more, and enjoy the material more, if we explain what is

really going on.

In the conventional “systems” curriculum, the material beyond data

structures (and possibly computer organization) tends to be

compartmentalized into a host of separate subjects, including

programming languages,

compiler construction,

computer architecture,

operating systems,

networks,

parallel and distributed computing,

database management systems,

and possibly

software engineering,

object-oriented design,

graphics,

or user interface systems.

One problem with this compartmentalization is that the list of subjects

keeps growing, but the number of semesters in a Bachelor’s program does

not. More important, perhaps, many of the most interesting discoveries

in computer science occur at the boundaries between subjects.

The RISC revolution,

for example, forged an alliance between computer

architecture and compiler construction that has endured for 25 years.

More recently, renewed interest in virtual machines

has blurred the boundaries between the operating system kernel, the

compiler, and the language run-time system. Programs are now routinely

embedded in web pages, spreadsheets, and user interfaces. And with the

rise of multicore processors,

concurrency issues that used to be a concern only for systems programmers

have begun to impact everyday computing.

Increasingly, both educators and practitioners are recognizing the need

to emphasize these sorts of interactions. Within higher education in

particular there is a growing trend toward integration in the core

curriculum. Rather than give the typical student an in-depth look at

two or three narrow subjects, leaving holes in all the others, many

schools have revised the programming languages and computer organization

courses to cover a wider range of topics, with follow-on electives in

various specializations. This trend is very much in keeping with the

findings of the ACM/IEEE-CS Computing Curricula 2001

task force, which emphasize

the growth of the field,

the increasing need for breadth,

the importance of flexibility in curricular design,

and the overriding goal of graduating students who “have

a system-level perspective,

appreciate the interplay between theory and practice,

are familiar with common themes,

and can adapt over time as the field evolves”

[Sec. 11.1, adapted].

The first two editions of Programming Language Pragmatics (PLP-1e

and -2e) had the good fortune of riding this curricular trend. This

third edition continues and strengthens the emphasis on integrated

learning while retaining a central focus on programming language design.

At its core, PLP is a book about how programming languages work.

Rather than enumerate the details of many different languages, it

focuses on concepts that underlie all the languages the student is

likely to encounter, illustrating those concepts with a variety of

concrete examples, and exploring the tradeoffs that explain why

different languages were designed in different ways. Similarly, rather

than explain how to build a compiler or interpreter (a task few

programmers will undertake in its entirety), PLP focuses on what a

compiler does to an input program, and why. Language design and

implementation are thus explored together, with an emphasis on the ways

in which they interact.

In comparison to the second edition, PLP-3e provides

1. A new chapter on virtual machines and run-time program management

2. A major revision of the chapter on concurrency

3. Numerous other reflections of recent changes in the field

4. Improvements inspired by instructor feedback or a fresh consideration of familiar topics

Item 1 in this list is perhaps the most visible change. It reflects the

increasingly ubiquitous use of both managed code and scripting

languages. Chapter 15 begins with a general overview of

virtual machines and then takes a detailed look at the two most widely

used examples: the JVM and the CLI. The chapter also covers dynamic

compilation, binary translation, reflection, debuggers, profilers, and

other aspects of the increasingly sophisticated run-time machinery found

in modern language systems.

Item 2 also reflects the evolving nature of the field. With the

proliferation of multicore processors,

concurrent languages have become increasingly important to mainstream

programmers, and the field is very much in flux. Changes to

Chapter 12 (Concurrency) include new sections on

nonblocking synchronization, memory consistency models, and software

transactional memory, as well as increased coverage of OpenMP, Erlang,

Java 5, and Parallel FX for .NET.

Other new material (Item 3) appears throughout the text.

Section 5.4.4 covers the multicore revolution from an

architectural perspective.

Section 8.7 covers event handling, in both sequential and

concurrent languages.

In Section 14.2, coverage of gcc internals

includes not only RTL, but also the newer GENERIC and Gimple

intermediate forms.

References have been updated throughout to accommodate such recent

developments as Java 6, C++ ’0X, C# 3.0, F#, Fortran 2003,

Perl 6, and

Scheme R6RS.

Finally, Item 4 encompasses improvements to almost every section of the text.

Topics receiving particularly heavy updates include

the running example of Chapter 1 (moved from

Pascal/MIPS to C/x86);

bootstrapping (Section 1.4);

scanning (Section 2.2);

table-driven parsing (Sections 2.3.2

and 2.3.3);

closures (Sections 3.6.2,

3.6.3,

8.3.1,

8.4.4,

8.7.2,

and 9.2.3);

macros (Section 3.7);

evaluation order and strictness (Sections 6.6.2

and 10.4);

decimal types (Section 7.1.4);

array shape and allocation (Section 7.4.2);

parameter passing (Section 8.3);

inner (nested) classes (Section 9.2.3);

monads (Section 10.4.2);

and the Prolog examples of Chapter 11 (now ISO conformant).

To accommodate new material, coverage of some topics has been condensed.

Examples include

modules (Chapters 3 and 9),

loop control (Chapter 6),

packed types (Chapter 7),

the Smalltalk class hierarchy (Chapter 9),

metacircular interpretation (Chapter 10),

interconnection networks (Chapter 12),

and thread creation syntax (also Chapter 12).

Additional material has moved to the

companion

web site (formerly packaged on an included CD, and still indicated

with a CD icon).

This includes

all of Chapter 5 (Target Machine Architecture),

unions (Section 7.3.4),

dangling references (Section 7.7.2),

message passing (Section 12.5),

and XSLT (Section 13.3.5).

Throughout the text, examples drawn from languages no longer in

widespread use have been replaced with more recent equivalents

wherever appropriate.

Overall, the printed text has grown by only some 30 pages, but there

are nearly 100 new pages on the companion site.

There are also 14 more “Design & Implementation” sidebars,

more than 70 new numbered examples,

a comparable number of new “Check Your Understanding” questions,

and more than 60 new end-of-chapter exercises and explorations.

Considerable effort has been invested in creating a consistent and

comprehensive index.

As in earlier editions, Morgan Kaufmann has maintained its commitment to

providing definitive texts at reasonable cost: PLP-3e is less expensive

than competing alternatives, but larger and more comprehensive.

To minimize the physical size of the text, make way for new material,

and allow students to focus on the fundamentals when browsing,

approximately 350 pages of more advanced or peripheral material appears

on the companion site. Each companion section is represented in

the main text by a brief introduction to the subject and an “In

More Depth” paragraph that summarizes the elided material.

Note that placement of material on the companion site does not

constitute a

judgment about its technical importance. It simply reflects the fact

that there is more material worth covering than will fit in a single

volume or a single semester course. Since preferences and syllabi vary,

most instructors will probably want to assign reading from the companion

site, and

most will refrain from assigning certain sections of the printed text.

My intent has been to retain in print the material that is likely to be

covered in the largest number of courses.

Also contained on the companion site are

compilable copies of all significant code fragments found in the

text (in more than two dozen languages),

an extensive search facility,

and pointers to on-line resources.

Design & Implementation Sidebars

Like its predecessors, PLP-3e places heavy emphasis on the ways in which

language design constrains implementation options, and the ways in which

anticipated implementations have influenced language design. Many of

these connections and interactions are highlighted in some 135

“Design & Implementation” sidebars. A more detailed

introduction to these sidebars appears in Chapter 1.

A numbered list appears in

Appendix B.

Numbered and Titled Examples

Examples in PLP-3e are intimately woven into the flow of the

presentation. To make it easier to find specific examples, to remember

their content, and to refer to them in other contexts, a number and a

title for each is displayed in a marginal note. There are nearly 1000

such examples across the main text and the companion site. A detailed list

appears in Appendix C.

Exercise Plan

Review questions appear throughout the text at roughly 10-page

intervals, at the ends of major sections. These are based directly on

the preceding material, and have short, straightforward answers.

More detailed questions appear at the end of each chapter. These are

divided into Exercises and Explorations. The former are

generally more challenging than the per-section review questions, and

should be suitable for homework or brief projects. The latter are more

open-ended, requiring web or library research, substantial time

commitment, or the development of subjective opinion.

Solutions to many of the exercises (but not the

explorations) are available to registered instructors from a

password-protected web site; visit

textbooks.elsevier.com/web/9780123745149.

Programming Language Pragmatics covers almost all of the material

in the PL “knowledge units” of the

Computing Curricula 2001

report.

The book is an ideal fit for the CS 341

model course (Programming Language Design), and can also be used for

CS 340 (Compiler Construction) or CS 343 (Programming

Paradigms). It contains a significant fraction of the content of

CS 344 (Functional Programming) and CS 346 (Scripting

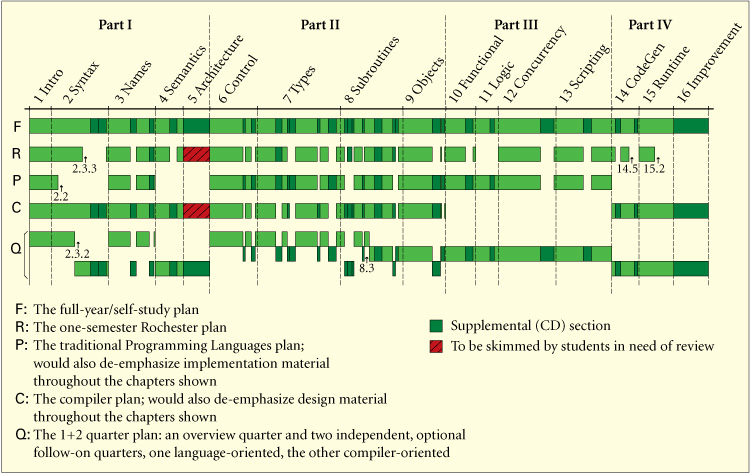

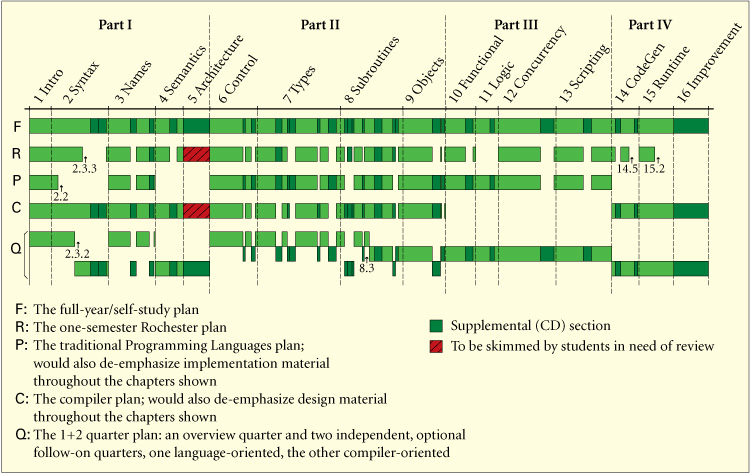

Languages). Figure 1 illustrates several possible

paths through the text.

Figure 1 Paths through the text.

Darker shaded regions indicate supplemental “In More

Depth” sections on the companion site. Section numbers are shown for

breaks that do not correspond to supplemental material.

For self-study, or for a full-year course (track

F in

Figure 1), I recommend working through

the book from start to finish, turning to the companion site as each “In More

Depth” section is encountered.

The one-semester course at the University of Rochester (track

R),

for which the text was originally developed, also covers most of the

book, but leaves out most of the companion sections, as well as bottom-up

parsing (2.3.3) and the second halves of Chapters 14 (Building a

Runnable Program) and 15 (Run-time Program Management).

Some chapters (1, 4, 5, 14, 15, 16)

have a heavier emphasis than others on

implementation issues. These can be reordered to a certain extent with

respect to the more design-oriented chapters. Many

students will already be familiar with much of the material in

Chapter 5, most likely from a course on computer

organization; hence the placement of the chapter on the companion site.

Some students may also be familiar with some of the material in

Chapter 2, perhaps from a course on automata theory.

Much of this chapter can then be read quickly as well, pausing perhaps

to dwell on such practical issues as recovery from syntax errors, or the

ways in which a scanner differs from a classical finite automaton.

A traditional programming languages course (track

P in

Figure 1) might leave out all of scanning and

parsing, plus all of Chapter 4. It would

also de-emphasize the more implementation-oriented material throughout.

In place of these it could add such design-oriented companion sections as

the ML type system (7.2.4),

multiple inheritance (9.5),

Smalltalk (9.6.1),

lambda calculus (10.6),

and

predicate calculus (11.3).

PLP has also been used at some schools for an introductory compiler

course (track

C

in Figure 1). The typical syllabus leaves out

most of Part III (Chapters 10

through 13), and de-emphasizes the more

design-oriented material throughout. In place of these it

includes all of scanning and parsing, Chapters 14

through 16, and a slightly different mix of other

companion sections.

For a school on the quarter system, an appealing option is to offer an

introductory one-quarter course and two optional follow-on courses

(track

Q

in Figure 1). The

introductory quarter might cover the main (non-companion) sections of

Chapters 1, 3,

6, and 7, plus the first halves of

Chapters 2 and 8.

A language-oriented follow-on quarter might cover the rest of

Chapter 8, all of Part III,

companion sections from Chapters 6

through 8, and possibly supplemental material

on formal semantics, type systems, or other related topics.

A compiler-oriented follow-on quarter might cover the rest of

Chapter 2;

Chapters 4 to 5 and 14 to 16;

companion sections from Chapters 3 and

8 to 9; and possibly

supplemental material on automatic code generation,

aggressive code improvement, programming tools, and so on.

Whatever the path through the text, I assume that the typical reader has

already acquired significant experience with at least one imperative

language. Exactly which language it is shouldn’t matter. Examples are

drawn from a wide variety of languages, but always with enough comments

and other discussion that readers without prior experience should be

able to understand easily.

Single-paragraph introductions to more than 50 different languages

appear in Appendix A.

Algorithms, when needed, are presented in an

informal pseudocode that should be self-explanatory. Real

programming language code is set in "typewriter" font.

Pseudocode is set in a sans-serif font.

In addition to supplemental sections, the companion site contains a

variety of other resources, including

- Links to language reference manuals and tutorials on the Web

- Links to open-source compilers and interpreters

- Complete source code for all nontrivial examples in the book

- A search engine for both the main text and the companion-site-only content

Additional resources are available at

textbooks.elsevier.com/web/9780123745149

(you may wish to check back from time to time).

For instructors who have adopted the text, a password-protected page

provides access to

- Editable PDF source for all the figures in the book

- Editable PowerPoint slides

- Solutions to most of the exercises

- Suggestions for larger projects

In preparing the third edition I have been blessed with the generous

assistance of a very large number of people. Many provided errata or

other feedback on the second edition, among them

Gerald Baumgartner,

Manuel E. Bermudez,

William Calhoun,

Betty Cheng,

Yi Dai,

Eileen Head,

Nathan Hoot,

Peter Ketcham,

Antonio Leitao,

Jingke Li,

Annie Liu,

Dan Mullowney,

Arthur Nunes-Harwitt,

Zongyan Qiu,

Beverly Sanders,

David Sattari,

Parag Tamhankar,

Ray Toal,

Robert van Engelen,

Garrett Wollman,

and

Jingguo Yao.

In several cases, good advice from the 2004 class test went unheeded in

the second edition due to lack of time; I am glad to finally have the

chance to incorporate it here.

I also remain indebted to the many individuals acknowledged in the first

and second editions, and to the reviewers, adopters, and readers

who made those editions a success.

External reviewers for the third edition provided a wealth of useful

suggestions; my thanks to

Perry Alexander (University of Kansas),

Hans Boehm (HP Labs),

Stephen Edwards (Columbia University),

Tim Harris (Microsoft Research),

Eileen Head (Binghamton University),

Doug Lea (SUNY Oswego),

Jan-Willem Maessen (Sun Microsystems Laboratories),

Maged Michael (IBM Research),

Beverly Sanders (University of Florida),

Christopher Vickery (Queens College, City University of New York),

and

Garrett Wollman (MIT).

Hans, Doug, and Maged proofread parts of

Chapter 12 on very short notice;

Tim and Jan were equally helpful with parts of

Chapter 10.

Mike Spear helped vet the transactional memory implementation of

Figure 12.18.

Xiao Zhang provided pointers for Section 15.3.3.

Problems that remain in all these sections are entirely my own.

In preparing the third edition, I have drawn on 20 years of experience

teaching this material to upper-level undergraduates at the University

of Rochester. I am grateful to all my students for their enthusiasm and

feedback. My thanks as well to my colleagues and graduate students, and

to the department’s administrative, secretarial, and technical staff for

providing such a supportive and productive work environment. Finally,

my thanks to Barbara Ryder, whose forthright comments on the first

edition helped set me on the path to the second; I am honored to have

her as the author of the Foreword.

As they were on previous editions, the staff at Morgan Kaufmann have

been a genuine pleasure to work with, on both a professional and a

personal level. My thanks in particular to Nate McFadden, Senior

Development Editor, who shepherded both this and the previous edition

with unfailing patience, good humor, and a fine eye for detail; to

Marilyn Rash, who managed the book’s production; and to Denise Penrose,

whose gracious stewardship, first as Editor and then as Publisher, has

had a lasting impact.

Most important, I am indebted to my wife, Kelly, and our daughters, Erin

and Shannon, for their patience and support through endless months of

writing and revising. Computing is a fine profession, but family is

what really matters.

Michael L. Scott

Rochester, NY

December 2008
