CVPR 2021

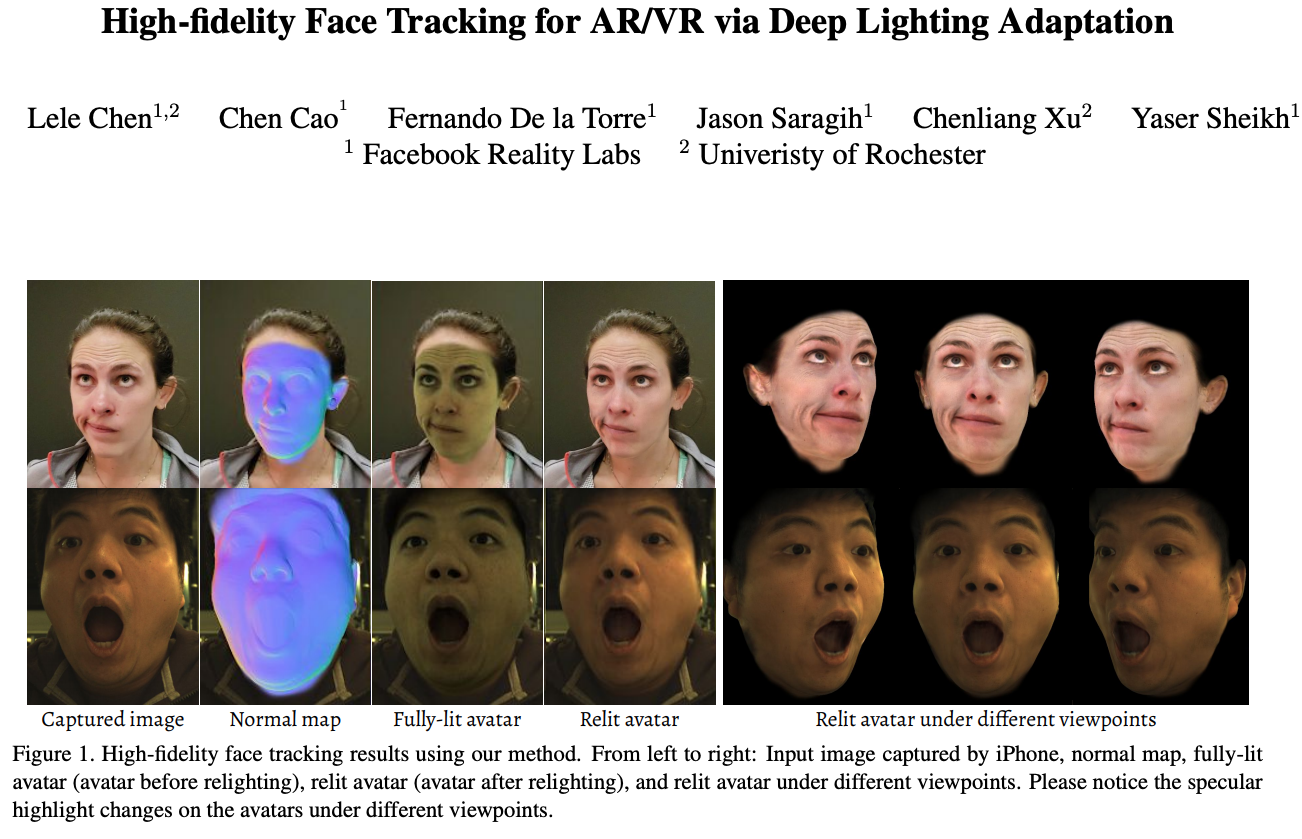

High-fidelity Face Tracking for AR/VR via Deep Lighting Adaptation

- 1 University of Rochester

- 2 Facebook Reality Labs

Abstract

3D video avatars can empower virtual communications by providing compression, privacy, entertainment, and a sense of presence in AR/VR. Best 3D photo-realistic AR/VR avatars driven by video, that can minimize uncanny effects, rely on person-specific models. However, existing person-specific photo-realistic 3D models are not robust to lighting, hence their results typically miss subtle facial behaviors and cause artifacts in the avatar. This is a major drawback for the scalability of these models in communication systems (e.g., Messenger, Skype, FaceTime) and AR/VR. This paper addresses previous limitations by learning a deep learning lighting model, that in combination with a high-quality 3D face tracking algorithm, provides a method for subtle and robust facial motion transfer from a regular video to a 3D photo-realistic avatar. Extensive experimental validation and comparisons to other state-of-the-art methods demonstrate the effectiveness of the proposed framework in real-world scenarios with variability in pose, expression, and illumination.

Paper

Video

Citation

@InProceedings{Chen_2021_CVPR,
    author    = {Chen, Lele and Cao, Chen and De la Torre, Fernando and Saragih, Jason and Xu, Chenliang and Sheikh, Yaser},
    title     = {High-Fidelity Face Tracking for AR/VR via Deep Lighting Adaptation},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2021},
    pages     = {13059-13069}
}