Chenliang Xu

I'm grateful to the following entities for their support of my research:

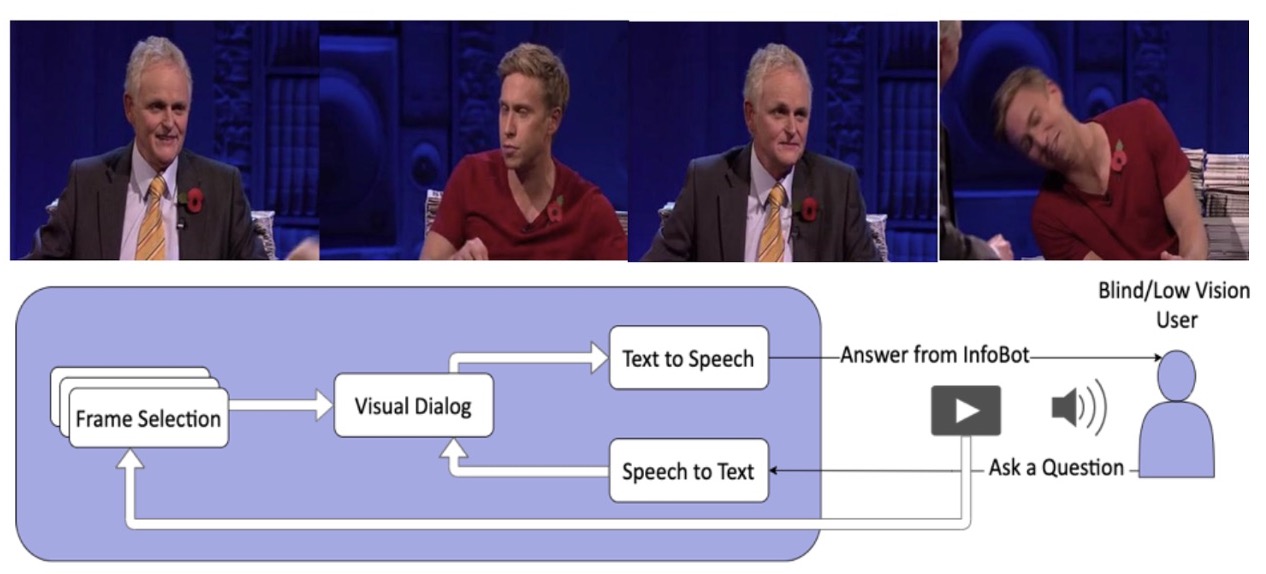

Automated Video Description for Blind and Low Vision Users

Perceptually-enabled Task Guidance

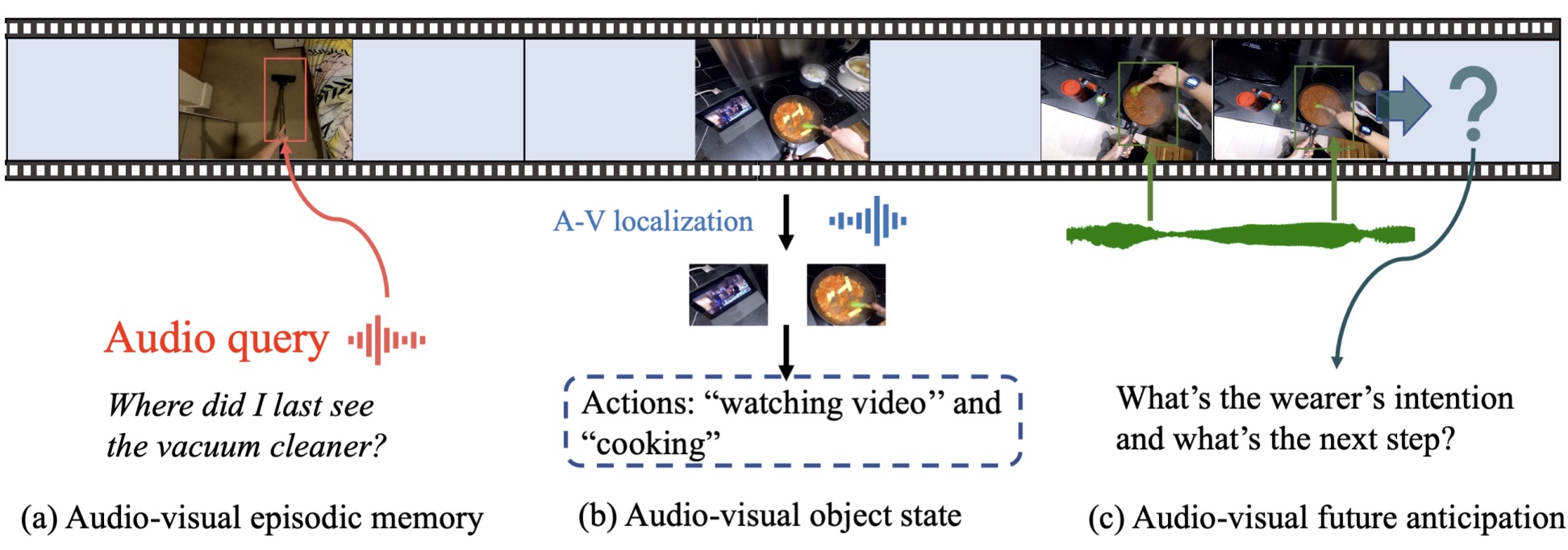

Egocentric 3D Audio-Visual Scene Understanding

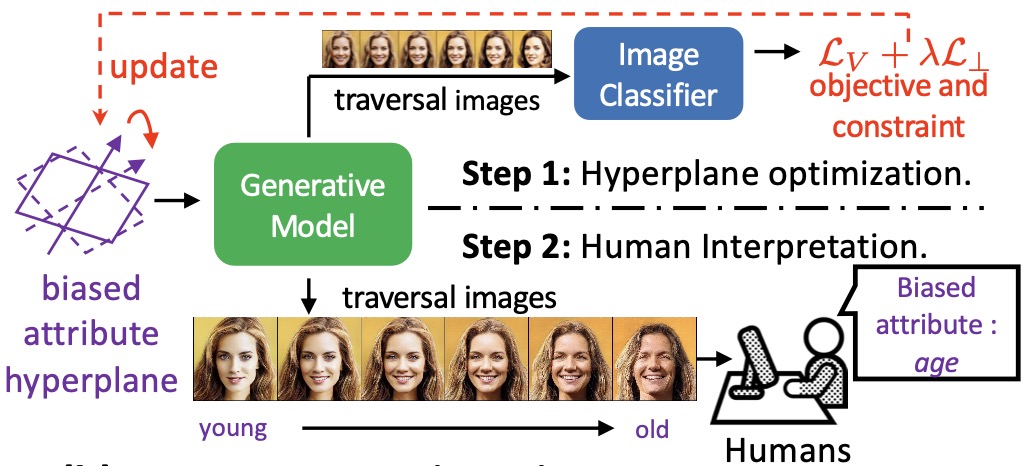

Identify Hidden Biases of AI Algorithms

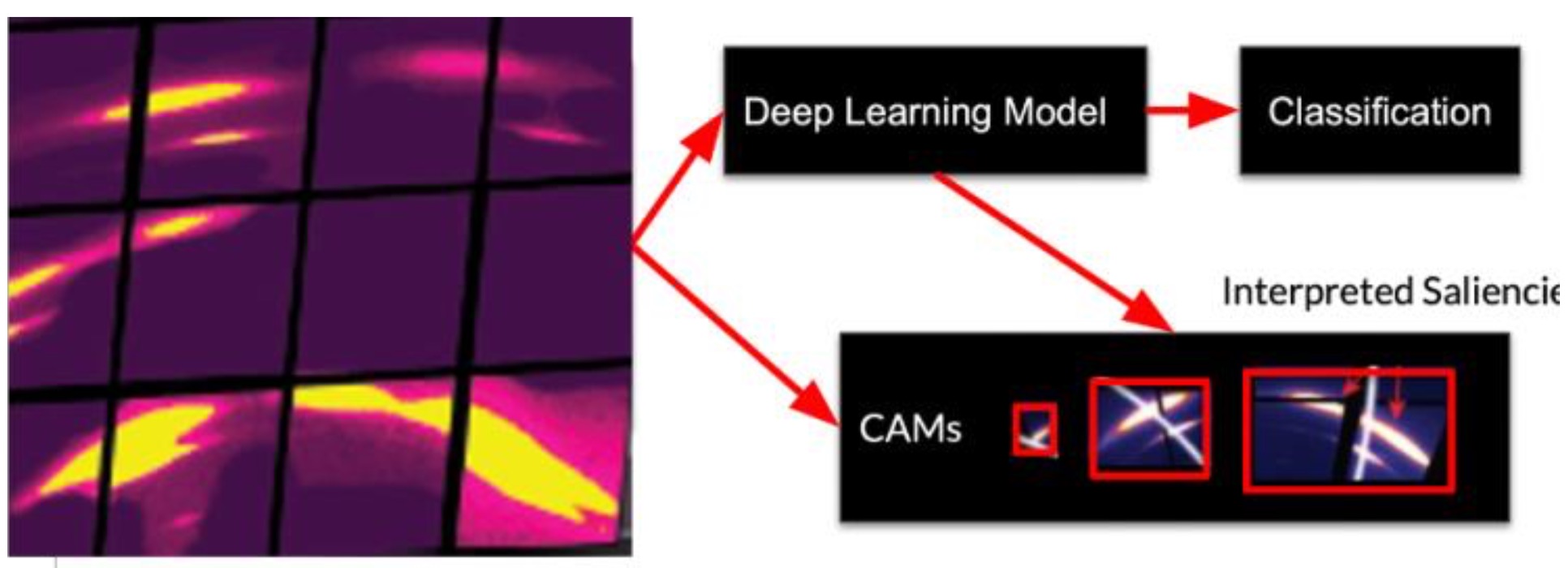

Inferring Lattice Dynamics from Temporal X-ray Diffraction Data

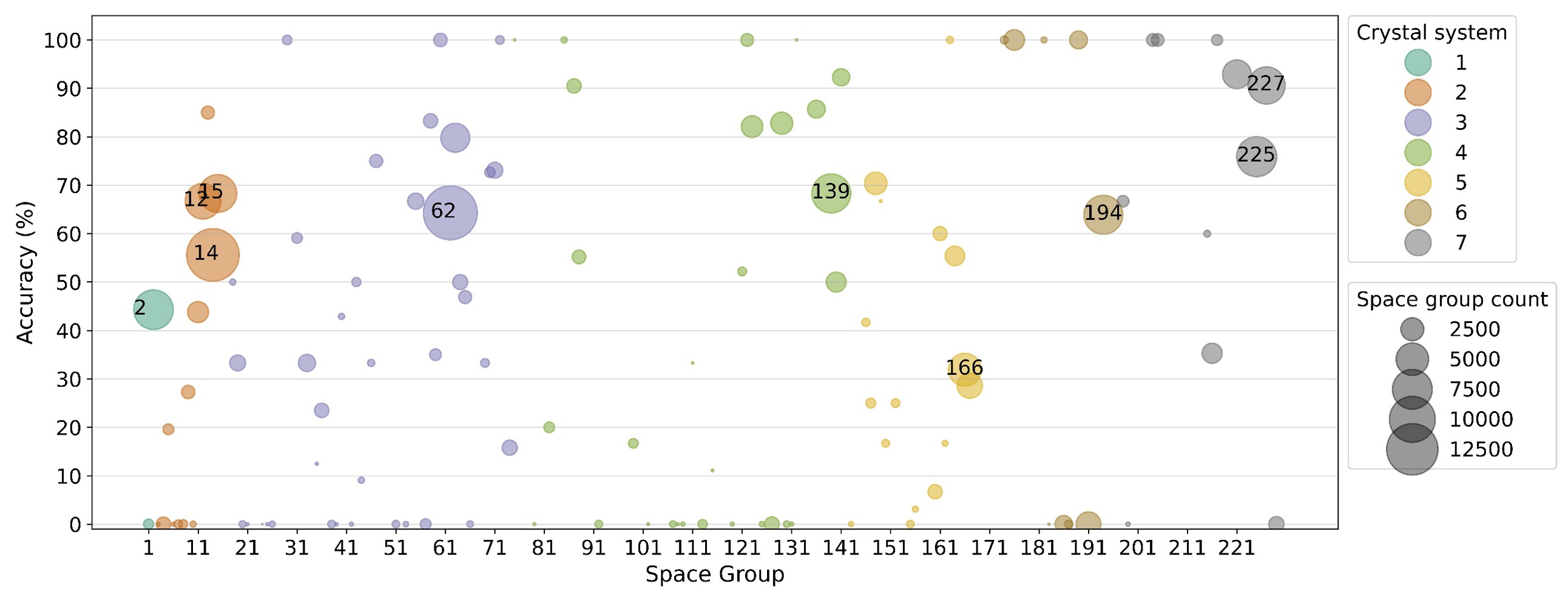

Time-resolved Classification of X-ray Diffraction Data
