Chenliang Xu

Scalable Deep Bayesian Tensor Decomposition

Award Number: NSF IIS 1909912

Award Title: III: Small: Collaborative Research: Scalable Deep Bayesian Tensor Decomposition

Award Amount: $199,134.00

Duration: 10/01/2019 - 09/30/2022 (Estimated)

Overview of Goals and Challenges:

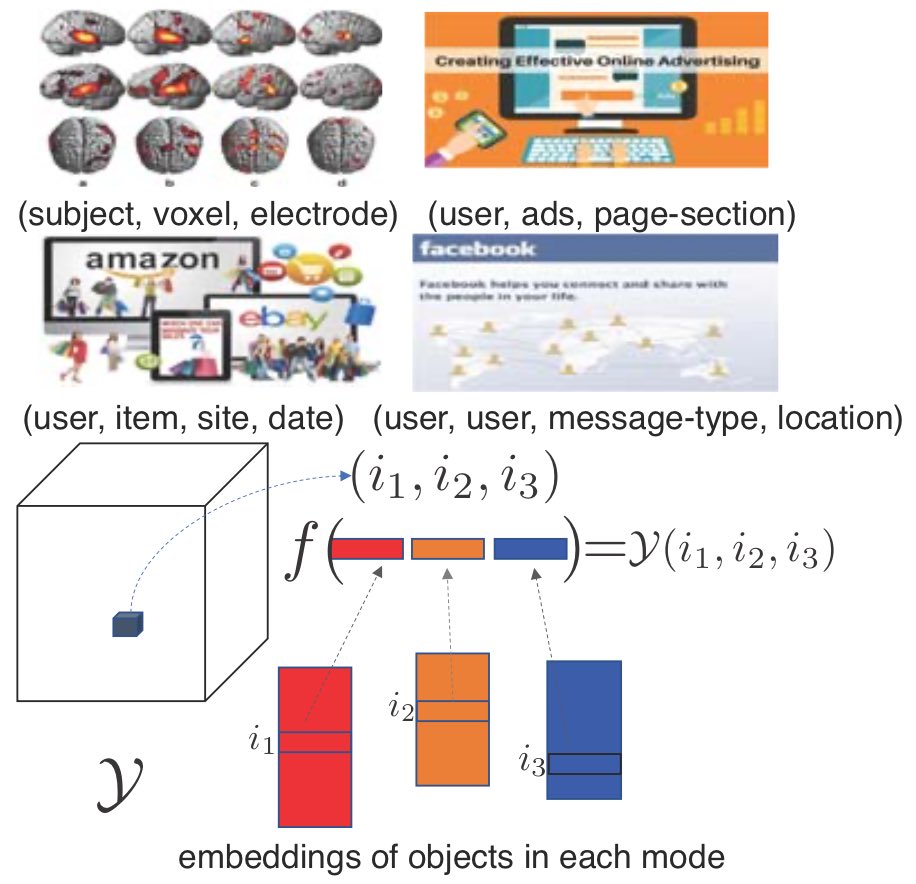

Many real-world applications, such as online shopping, recommendation, social media, and information security, involve interactions among different entities. For example, online shopping behavior can be described simply by the interactions among customers, commodities, and shopping websites. These interactions are naturally represented by tensors, which are multidimensional arrays. Each dimension (or mode) represents a type of entity (e.g., customers or commodities), and each element describes a particular interaction (e.g., purchased/not purchased). The project aims to develop flexible and efficient tensor decomposition approaches that can discover a variety of complicated relationships among the entities in tensors, handle the enormous amounts of data produced by practical applications, and adapt to rapid data growth. The developed approaches can support many important prediction and knowledge discovery tasks, such as improving recommendation accuracy, predicting advertisement click-through rates, understanding how misinformation propagates through social media, and detecting malicious cellphone apps.
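To make the tensor representation concrete, the following is a minimal sketch, assuming a hypothetical purchase log of (customer, commodity, website) triples. It maps each entity to an integer index within its mode and stores only the observed entries, since real interaction tensors are typically extremely sparse. The names `purchases` and `build_sparse_tensor` are illustrative only and are not part of the project.

```python
from typing import Dict, List, Tuple

# Hypothetical purchase log: (customer, commodity, website) triples.
# These names are illustrative placeholders, not project data.
purchases: List[Tuple[str, str, str]] = [
    ("alice", "laptop", "shopA"),
    ("bob", "phone", "shopB"),
    ("alice", "phone", "shopB"),
]


def build_sparse_tensor(triples):
    """Build a sparse 3-mode tensor from interaction triples.

    Each mode (customers, commodities, websites) gets its own
    name -> index map. Observed interactions are stored as
    {(i, j, k): 1.0}, where 1.0 means "purchased"; unobserved
    entries are simply absent.
    """
    modes: List[Dict[str, int]] = [{}, {}, {}]
    entries: Dict[Tuple[int, ...], float] = {}
    for triple in triples:
        # Assign the next free index the first time a name is seen in a mode.
        idx = tuple(
            modes[m].setdefault(name, len(modes[m]))
            for m, name in enumerate(triple)
        )
        entries[idx] = 1.0
    shape = tuple(len(m) for m in modes)
    return modes, shape, entries


modes, shape, entries = build_sparse_tensor(purchases)
print(shape)    # (2, 2, 2)
print(entries)  # {(0, 0, 0): 1.0, (1, 1, 1): 1.0, (0, 1, 1): 1.0}
```

A dictionary keyed by index tuples (a coordinate-style sparse format) is used here only because it is simple; at the scales the project targets, a dedicated sparse storage format would be needed.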

Despite their success, existing tensor decomposition approaches rely on multilinear decomposition forms or shallow kernels and therefore cannot capture highly complicated relationships in data. Yet complex, nonlinear relationships, effects, and patterns are ubiquitous, owing to the diversity and complexity of practical applications. Furthermore, efficient and scalable nonlinear decomposition algorithms are lacking, both for today's static tensors of unprecedented scale and for dynamic tensors that grow rapidly and continuously. The project aims to develop scalable deep Bayesian tensor decomposition approaches that maximize the flexibility to capture all kinds of complex relationships, efficiently process both static data at unprecedented scales and rapid data streams, and provide uncertainty quantification for both embedding estimates and predictions. The research will be accomplished through: (1) the design of new Bayesian tensor decomposition models that incorporate deep architectures to improve the capability of estimating intricate functions, (2) the development of decentralized, asynchronous learning algorithms to process extremely large-scale static tensors, (3) the development of online incremental learning algorithms that handle rapid data streams and produce responsive updates upon receiving new data, without retraining from scratch, and (4) comprehensive evaluations on both synthetic and real-world big data. The proposed research will contribute a markedly improved tensor decomposition toolset that is powerful enough to estimate arbitrarily complex relationships, scalable to static tensors at unprecedented scales (e.g., billions of nodes and trillions of entries), and scalable to fast data streams through efficient incremental updates. Moreover, because they are Bayesian, the toolset's methods are resilient to noise, provide posterior distributions for uncertainty quantification, and integrate over all possible outcomes to produce robust predictions.
Once the toolset is available, the understanding of high-order relationships in tensors and the mining of associated patterns, such as communities and anomalies, will be greatly enhanced, and predictive performance for quantities of interest, such as social links, click-through rates, and recommendations, will be substantially improved.
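The difference between a multilinear decomposition form and a nonlinear one can be illustrated with a small sketch. Below, `cp_entry` uses the standard CANDECOMP/PARAFAC (CP) form, in which an entry is predicted as a sum over rank components of products of the entity embeddings; `mlp_entry` instead feeds the concatenated embeddings through a one-hidden-layer network. This is a generic illustration under stated assumptions, not the project's model: the function names, the choice of a tanh network, and the randomly drawn placeholder weights are all hypothetical, and no training or Bayesian inference is shown.

```python
import math
import random
from typing import List

Vector = List[float]


def cp_entry(u: Vector, v: Vector, w: Vector) -> float:
    """Multilinear (CP) form: x_ijk ~ sum_r u_r * v_r * w_r.

    The prediction is linear in each embedding when the others are fixed.
    """
    return sum(a * b * c for a, b, c in zip(u, v, w))


def mlp_entry(u: Vector, v: Vector, w: Vector,
              W1: List[Vector], b1: Vector, w2: Vector, b2: float) -> float:
    """Nonlinear form: x_ijk ~ f([u; v; w]) with f a one-hidden-layer tanh network.

    W1/b1 are the hidden-layer weights and biases, w2/b2 the output layer.
    (Hypothetical architecture chosen only for illustration.)
    """
    x = u + v + w  # concatenate the three embeddings
    hidden = [math.tanh(sum(wij * xj for wij, xj in zip(row, x)) + bi)
              for row, bi in zip(W1, b1)]
    return sum(a * h for a, h in zip(w2, hidden)) + b2


# Rank-2 embeddings for one (customer, commodity, website) entry.
u, v, w = [1.0, 2.0], [0.5, 1.0], [2.0, 0.5]
print(cp_entry(u, v, w))  # 1.0*0.5*2.0 + 2.0*1.0*0.5 = 2.0

# Placeholder (untrained) network weights, drawn at random for illustration.
rng = random.Random(0)
n_hidden, n_in = 4, len(u) + len(v) + len(w)
W1 = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
b1 = [0.0] * n_hidden
w2 = [rng.gauss(0.0, 0.5) for _ in range(n_hidden)]
print(mlp_entry(u, v, w, W1, b1, w2, 0.0))
```

Doubling `u` exactly doubles the CP prediction, whereas the tanh network has no such restriction, which is the extra flexibility that deep architectures are meant to provide.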

Disclaimer: Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Point of Contact: Chenliang Xu

Date of Last Update: December, 2020